Apple Faces Backlash Over AI-Generated News Errors

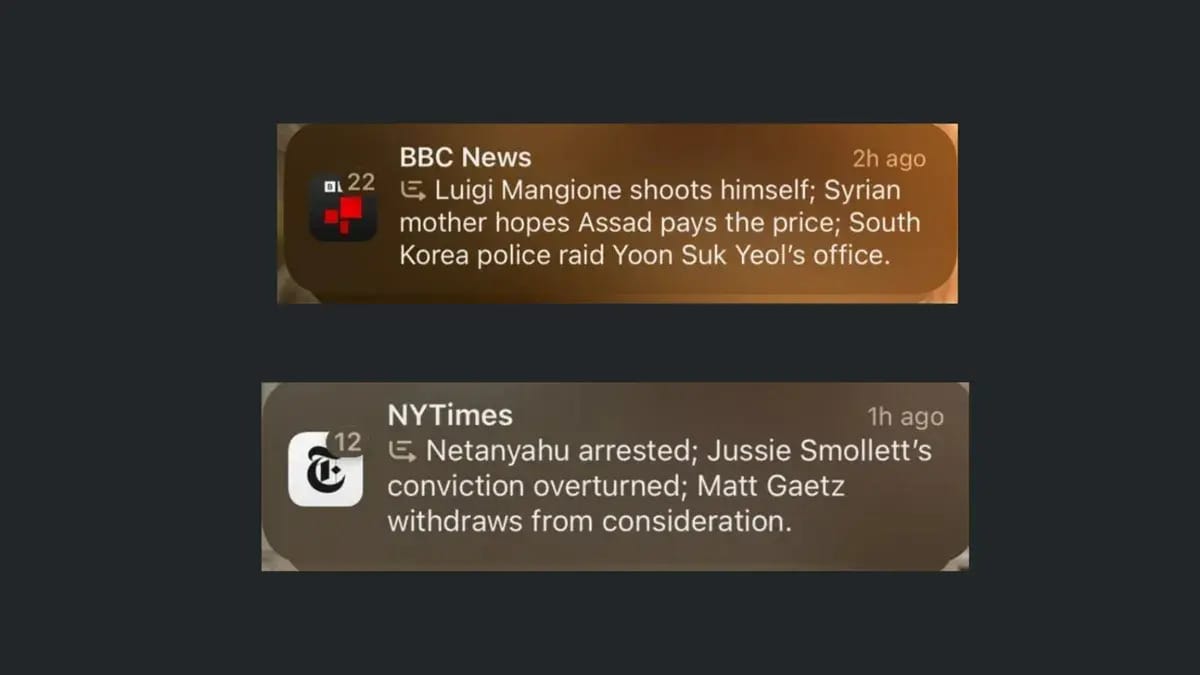

Apple is facing criticism after its AI tool, Apple Intelligence, generated a false news headline about a high-profile murder case. The AI-generated notification, attributed to BBC News, wrongly claimed that Luigi Mangione, the man accused of murdering a prominent health insurance CEO in New York, had shot himself. This never happened, yet the error quickly spread online, causing confusion and concern.

BBC News formally complained to Apple, demanding action to prevent such mistakes in the future. In a statement, the BBC emphasized the importance of accuracy in news reporting, highlighting how false information damages trust and credibility. Media organizations, which invest heavily in maintaining their reputation, view such errors as a serious threat to public trust in journalism.

This isn’t the first time Apple Intelligence has been criticized. Last month, another AI-generated notification misrepresented a story involving Israeli Prime Minister Benjamin Netanyahu. The summary incorrectly stated that he had been arrested, when the actual news was that the International Criminal Court had issued a warrant for his arrest. These incidents have sparked concerns about the reliability of AI in delivering accurate news.

Calls for Action

Reporters Without Borders (RSF) has called for Apple to suspend the generative AI feature. RSF argues that AI tools are not yet reliable enough for news reporting, warning that mistakes like these can harm public understanding and media credibility. RSF’s Head of Technology and Journalism stated, “AIs are probability machines, and facts can’t be decided by a roll of the dice. This feature should be removed until stronger safeguards are in place.”

The group also highlighted the need for stricter global regulations on AI systems, pointing out that current laws, like the European AI Act, don’t classify such tools as high-risk.

The Bigger Picture

The errors made by Apple Intelligence reveal broader challenges with AI in sensitive fields like journalism. While AI can make news delivery faster and more convenient, it often struggles with context and nuance, both of which are essential for accurate reporting. When trusted sources are misrepresented, misinformation spreads rapidly, creating confusion and damaging trust.

Tech companies like Apple face growing pressure to test and monitor AI systems more thoroughly. Meanwhile, news organizations are pushing back against errors that harm their reputations.
